Sunday, 15 February

13:00 EST

Celebrity Ascent Southern Caribbean cruise review [Philip Greenspun’s Weblog]

This is about a January 2-12, 2026 trip on the Celebrity Ascent from/to Fort Lauderdale via the following ports:

- Tortola, British Virgin Islands

- St. John’s, Antigua

- Barbados

- St. Lucia

- St. Kitts

TL;DR: It’s a big ship, but you feel like family. The officers and staff are warm and friendly. The food is much better than on Royal Caribbean. The ship orchestra and the house band (Blue Jays with Jessica Gabrielle) were superb.

The Machine

Celebrity Ascent was completed in 2023 by Chantiers de l’Atlantique at a cost of $1.2 billion and holds about 3,300 passengers on a typical cruise, plus 1,400 crew. She’s notable for having a “Magic Carpet” that can slide up and down the side of the ship, serving as a restaurant or bar most of the time, but also an embarkation platform for the ship’s tenders at ports where there isn’t a pier.

I don’t think she’ll win any beauty contests, but Ascent is very functional! In St. Lucia:

Note that there is no place on board to land a helicopter. If someone gets sick and needs to be evacuated, only the Coast Guard or one of the private contractors that the Europeans like to use can extract someone from the ship with a hoist.

Despite the potential of Starlink, Internet service actually provisioned was too slow for work (see Celebrity Starlink Wi-Fi Internet (3 Mbps at $1,000 per month)).

With 73,000 hp of Wärtsilä diesel power (five engines total), I’m not sure that Greta Thunberg will want to be a customer. That said, the hull design is 22 percent more fuel efficient than older ships. How is it possible to advance the art of naval architecture, already relatively mature during the Second Punic War (2,250 years ago)? The efficiency doesn’t come from an improved hull shape, but from pushing air out at the bow and, thus, enabling the ship to ride on a cushion of air rather than clawing at the draggy water. Prof. Dr. ChatGPT, Ph.D. Naval Arch. explains:

Modern cruise ships sometimes use air lubrication systems (ALS) that pump compressed air through tiny openings in the hull—usually along the flat bottom.

1. Reduced Skin-Friction Drag

- Water is ~800× denser and far more viscous than air.

- Replacing direct water–steel contact with air–water contact drastically lowers friction.

- Skin friction accounts for 50–80% of total resistance at cruise speeds.

2. Lower Fuel Consumption

Typical real-world savings:

- 5–10% fuel reduction on large ships

- Sometimes higher on wide, flat-bottomed hulls (like cruise ships)

Many modern ships include air lubrication, including vessels from:

- Mitsubishi Heavy Industries

- Wärtsilä

- Silverstream Technologies

Some notable cruise lines have retrofitted ALS systems to existing ships to improve efficiency.

Air-bubble (air-lubrication) systems have a longer history than most people realize—they date back over half a century, but only became practical for cruise ships fairly recently.

Air lubrication always worked—but it needed:

- Cheap, efficient electrical power onboard

- Sophisticated control software

- Environmental pressure (fuel cost + emissions)

- Better hull designs to keep the air where it belongs

Cruise ships finally ticked all the boxes.
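The quoted figures can look inconsistent at first. If skin friction is 50-80% of resistance, why are the savings only 5-10%? A first-order sketch helps. The air layer covers only part of the hull (mainly the flat bottom), it removes only part of the friction where it sits, and the blowers cost power. Every parameter value below is hypothetical and chosen for illustration. None of them is data for Ascent or for any ALS supplier. The sketch also assumes that fuel burn scales with propulsion power at a fixed speed.

```python
def als_net_saving(friction_share: float, covered_fraction: float,
                   local_reduction: float, blower_penalty: float = 0.0) -> float:
    """First-order net fraction of propulsion power saved by air lubrication.

    friction_share   -- skin friction as a fraction of total hull resistance
    covered_fraction -- share of that friction acting where the air layer sits
    local_reduction  -- fraction of friction removed under the air layer
    blower_penalty   -- compressor power as a fraction of propulsion power
    """
    for value in (friction_share, covered_fraction, local_reduction, blower_penalty):
        if not 0.0 <= value <= 1.0:
            raise ValueError("all inputs are fractions between 0 and 1")
    gross = friction_share * covered_fraction * local_reduction
    return gross - blower_penalty


# Hypothetical illustrative inputs, not measurements.
for share in (0.5, 0.8):
    saving = als_net_saving(share, covered_fraction=0.3,
                            local_reduction=0.4, blower_penalty=0.01)
    print(f"skin friction {share:.0%} of resistance -> net saving {saving:.1%}")
```

With these made-up inputs the result lands inside the 5-10 percent band quoted above. The sketch shows why a large friction share does not translate one-for-one into fuel savings.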

The Stateroom

Booking about three weeks before departure, we got literally the last room available on the ship, other than an inside cabin. We had a Concierge Class 285-square-foot stateroom including the veranda, which ends up becoming part of the room because of the top glass panel’s ability to slide vertically. It’s a clever design. Our room was laid out like the photo below, except that we had the two halves of the bed split with a night table in between. We could have used outlets on both sides of the bed, but found an outlet on only one side. The bathroom felt spacious.

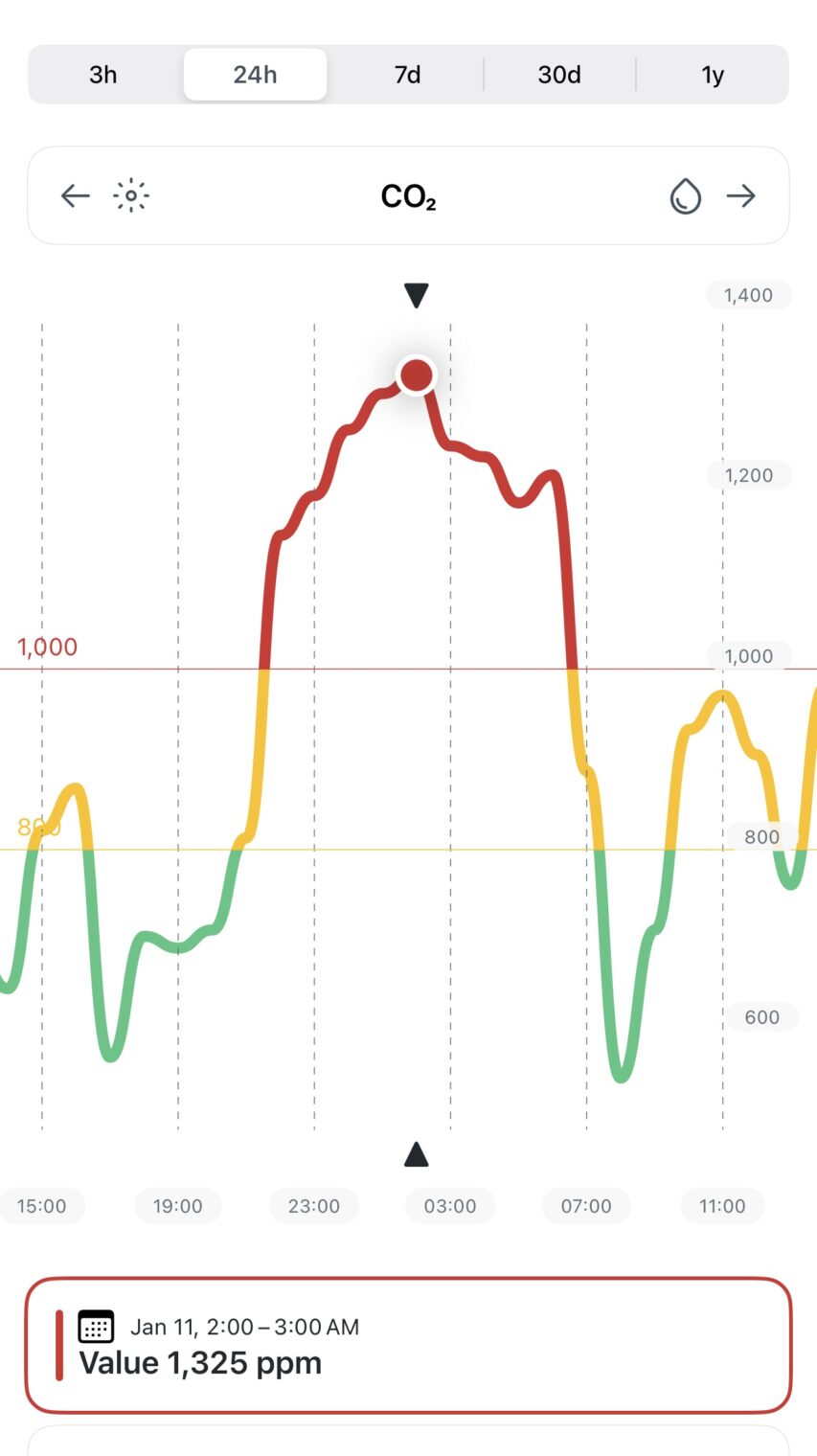

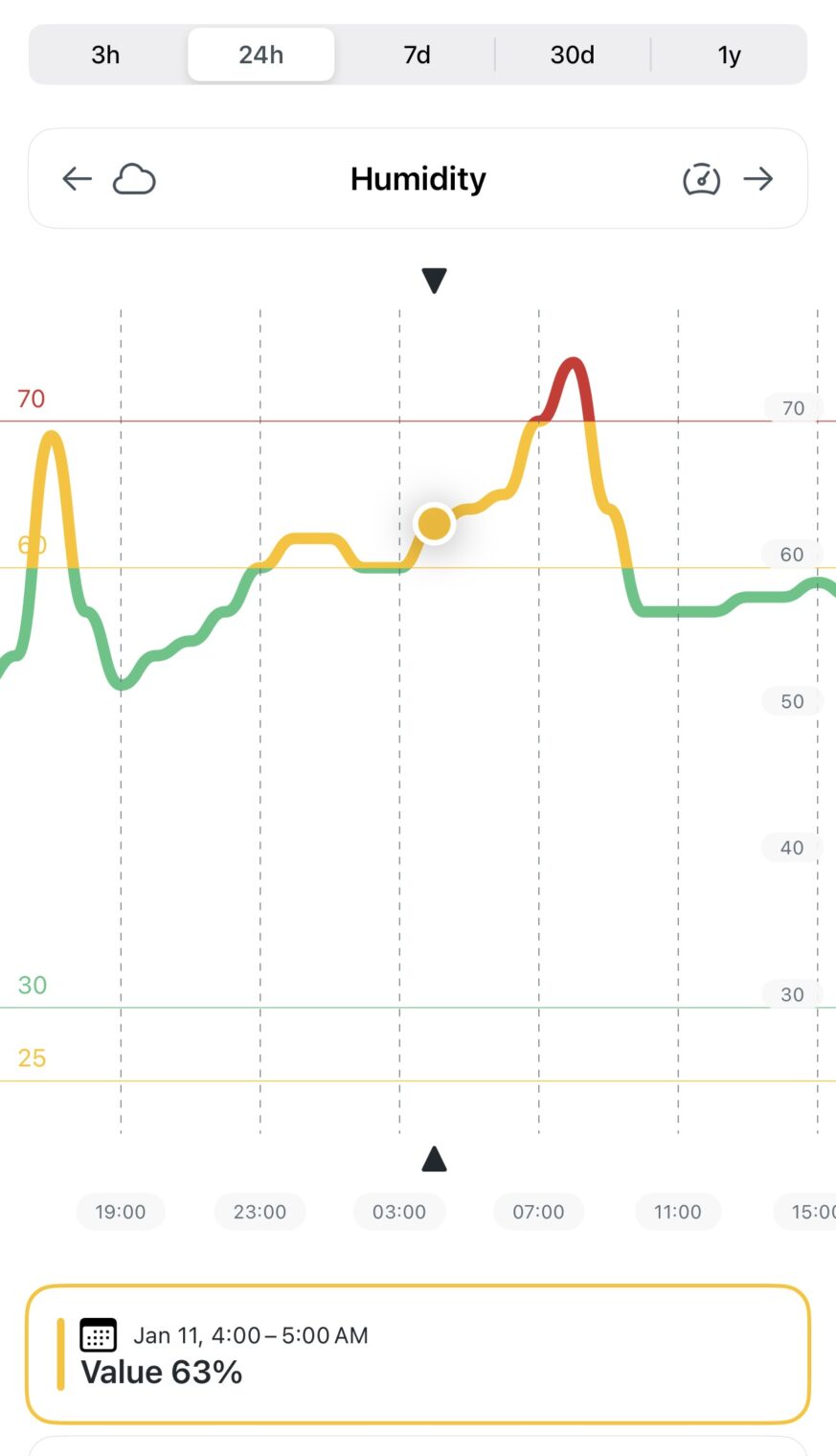

The in-room HVAC doesn’t dehumidify as much as one would expect, nor does it bring significant fresh air into the room when the veranda window is sealed. Humidity without the window open would range from 50-65% (how do they avoid mold?) and CO2 levels in the middle of the night would go over 1,300 ppm (a real nightmare for Greta Thunberg!). Data from an Airthings Wave Enhance:

(I’ve seen CO2 go to 1,000 ppm in some hotels in humid environments, such as Miami. The ASHRAE standard is 800-1,000 ppm. CO2 by itself isn’t harmful (up to 5,000 ppm is tolerated in submarines), but is an indication of how much fresh air is coming in. Atmospheric CO2 is about 430 ppm. In my old Harvard Square condo (crummy 1880s construction) with just one person in the bedroom (me), the CO2 level reached 700 ppm in the middle of the night.)
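The overnight CO2 readings can be turned into a rough fresh-air estimate with the standard single-zone, steady-state mass balance: Q = N·G / (C_in − C_out). Here N is the number of occupants, G is the CO2 each person exhales, and C_in and C_out are the indoor and outdoor concentrations. Several inputs are assumptions, not figures from the post. The sketch takes a sleeping adult's CO2 output to be about 0.003 L/s, assumes two people slept in the stateroom, and treats the room as well mixed and at a plateau.

```python
"""Rough steady-state fresh-air estimate from an overnight CO2 reading."""

# Assumption (not from the post): a sleeping adult exhales about
# 0.003 L/s of CO2. Real rates vary with body size and metabolism.
CO2_PER_SLEEPER_LPS = 0.003


def outdoor_air_lps(indoor_ppm: float, outdoor_ppm: float, occupants: int,
                    co2_per_person_lps: float = CO2_PER_SLEEPER_LPS) -> float:
    """Outdoor-air flow (L/s) that would hold a well-mixed room at indoor_ppm.

    Single-zone steady-state mass balance: Q = N * G / (C_in - C_out).
    """
    excess = (indoor_ppm - outdoor_ppm) * 1e-6  # ppm difference -> volume fraction
    if excess <= 0:
        raise ValueError("indoor CO2 must exceed outdoor CO2")
    return occupants * co2_per_person_lps / excess


# Stateroom: ~1,300 ppm overnight, assuming two people slept in it.
cabin = outdoor_air_lps(1300, 430, occupants=2)
# Harvard Square bedroom: ~700 ppm with one sleeper.
condo = outdoor_air_lps(700, 430, occupants=1)

print(f"cabin: {cabin:.1f} L/s total, {cabin / 2:.1f} L/s per person")
print(f"condo: {condo:.1f} L/s total, {condo:.1f} L/s per person")
```

Under these assumptions, the cabin gets roughly 7 L/s of outdoor air for two people, or about 3.4 L/s each. The condo gets about 11 L/s for one sleeper. If the CO2 level was still climbing rather than at a plateau, the true fresh-air flow is lower than this estimate.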

The Passengers

Typical passengers seemed to be the same kinds of folks who would move into The Villages (the most active over-55 community in the U.S.?). Here are a couple of brothers who were, I think, traveling with their parents (flamingo suits from Amazon):

Exercise on Board

There is a beautiful and never-crowded gym on Deck 15 looking straight out at the sea in front of the ship:

Ascent lacks the “walking/jogging track all the way around Deck 5” that was a conventional exercise solution on older ships and instead has a bizarre serpentine track on (crowded) Decks 15 and 16 that is also used by people getting to and from lounge chairs. The lack of the conventional all-around-the-ship track was my biggest disappointment, which I guess means that everything else was at least pretty good!

Here’s the track. Notice that it isn’t shaded, unlike the typical round-the-ship track, and it is surrounded by clutter and people. (The Magic Carpet is in the background in its higher position.)

Food

The food is a significant step up from what’s offered on Royal Caribbean, whose parent company acquired Celebrity in 1997. This is good and bad, I guess: I lost weight during every Royal cruise and gained some weight on this Celebrity trip.

One important source of weight gain was that, unlike almost anyone in the U.S. and certainly unlike anyone on Royal, the baker for Ascent was able to make a high quality croissant. These were hard to resist at breakfast. (Fortunately, they were just as bad as Royal at making donuts! The worst Dunkin’ does a better job.) Then at about half the other meals in the buffet they had addictive bread pudding. There was always an option for Indian food at the buffet (4 or 5 dishes plus bread) and typically at least two or three other Asian choices.

A friend who owns some superb restaurants did the Retreat class on Celebrity and said that the dedicated restaurant for those elite passengers exceeded his expectations. We hit the specialty steak restaurant on Ascent and were somewhat disappointed. They can’t have a gas grill on board for safety reasons and, apparently, don’t know how to use induction and a cast iron pan. The steaks are, therefore, rather soggy. We ate in the main dining room and buffet restaurants after that.

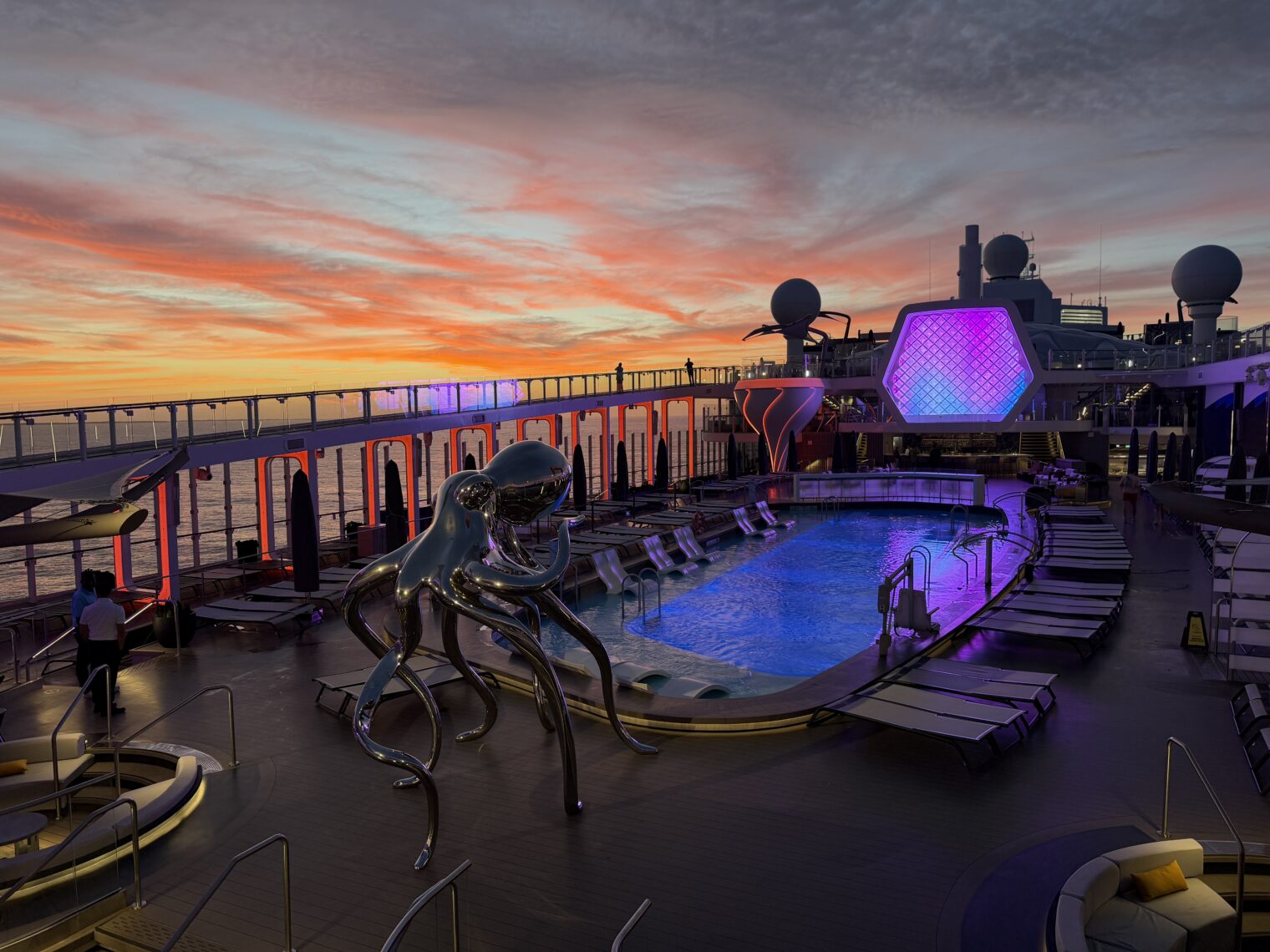

The Pool

There’s an indoor solarium pool for Alaska and European cruises. Here’s the outdoor pool (big enough for water aerobics and kids to goof around; not really big enough to swim for exercise (though it emptied out towards sunset so maybe one could)):

Still open and empty because everyone is dressing for dinner?

There are some hot tubs, but they’re not quite hot enough (i.e., you could comfortably sit in one for an hour):

The Spa

If you’re doing an Alaska cruise it probably would make sense to pay for Aqua Class, which includes access to these heated loungers looking out at the sea (not all that appealing on a Caribbean cruise!). The SEA Thermal Suite:

Sports under the Stars

Our cruise coincided with NFL playoffs and people enjoyed the big screen experience in the “Rooftop Garden”:

Entertainment

The resident musicians, singers, and dancers were all great. I personally wish that cruise lines would do full plays or musicals rather than assemble songs from disparate sources and string them together, but apparently I’m a minority of one and attention spans dictate that shows last for just 45 minutes. Some of the guest stars were fantastic, notably Stephen Barry, an Irish singer with a fun attitude. Steve Valentine did a mind-bending Vegas-quality magic show. The technical aspects of the theater were up to Broadway standards or beyond.

Some of my favorite shows were ones where the ship’s orchestra got together with one of the singers from a smaller group and just played music. I’m more of a classical music fan, but the high level of talent live was compelling.

For Kids

There is a small Camp at Sea for kid kids, which some of the youngsters on board seemed to like. My 16-year-old companion rejected the Teen Club, finding only boys playing videogames.

Unlike on Royal Caribbean, there weren’t many under-18s on board. That said, I never saw a child or teen who seemed bored or unhappy. They were loving the food, the scenery, the pool, etc.

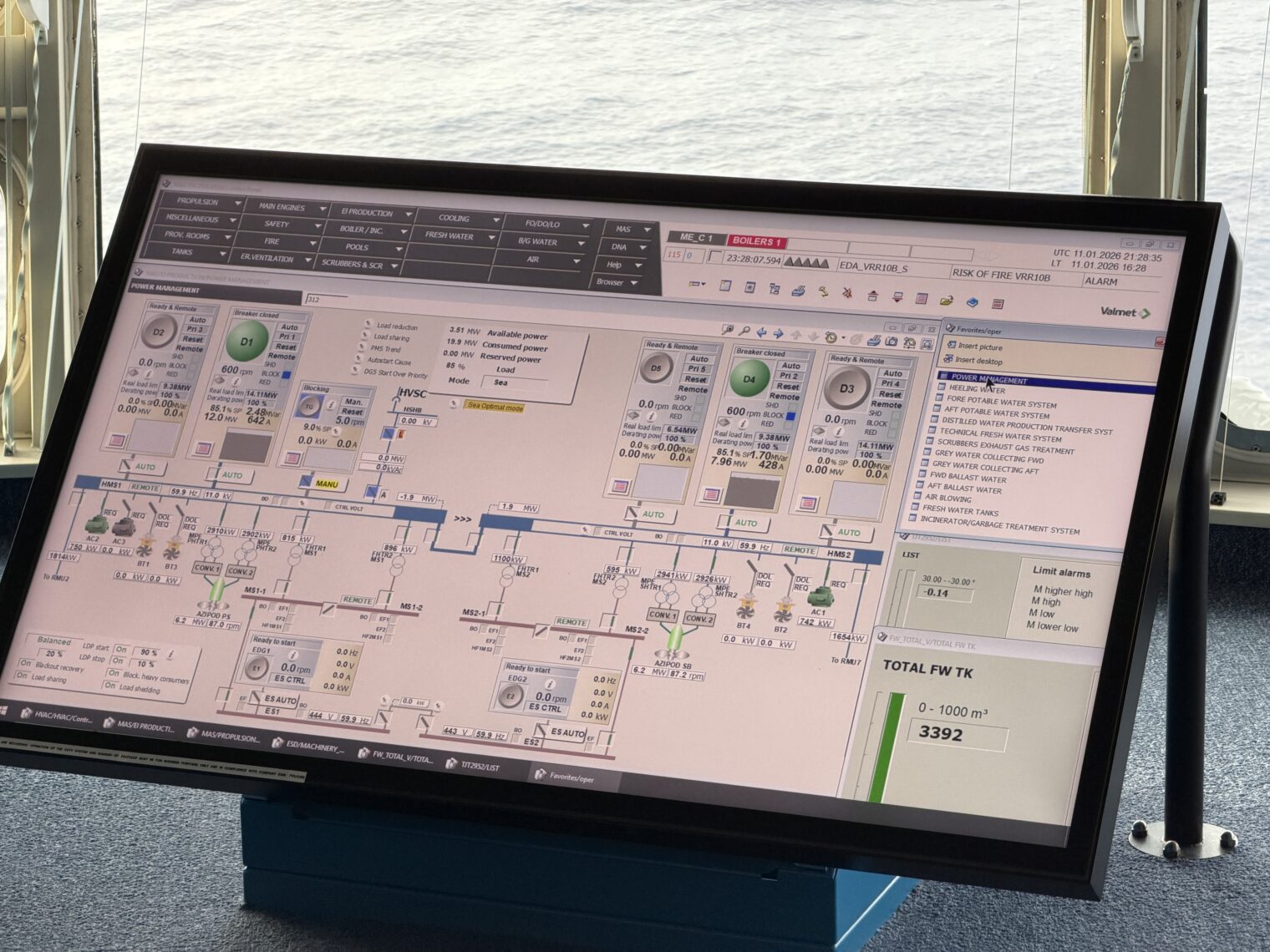

The Bridge

The bridge is worth seeing. It’s a masterpiece of ergonomics. Apparently, the captain takes direct control of the Azipods when docking. I had expected a joystick and a computer to figure out what to do with the bow thrusters and the Azipods, but that’s not how it is done.

The Dancers (Bear+Woman)

Art imitates life (“Based on May 2024 surveys, approximately 31% to 37% of women in the US and UK indicated they would prefer to be alone in the woods with a bear over a strange man, with higher rates among younger women (up to 53% for 18-29 year olds in the UK).”):

Conclusion

The whole trip cost about $8,000 including all of the extras, such as Internet and a couple of shore excursions, but no drinks package and only a few extra-cost drinks. We could have done it for less if we’d booked farther ahead or chosen a more basic room. It worked out to $800 per day for great scenery, fun entertainment, more food than I should have eaten, and an introduction to five islands, three of which were entirely new to me and two of which I hadn’t visited for more than 20 years.

I will remember the warmth of the Celebrity crew. Everyone seemed genuinely interested in welcoming and taking care of us.

[For the cruise haters: We could have flown to a Caribbean island from FLL, stayed in a hotel, picked restaurants, and flown back for about the same price (or 50 percent more for the same level of luxury?). The boat ride itself has value to me, however. I love to be on deck when arriving or departing. It’s a different kind of understanding of how the Caribbean is put together geographically and culturally than one might get from being airdropped by an Airbus A320.]

The post Celebrity Ascent Southern Caribbean cruise review appeared first on Philip Greenspun’s Weblog.

12:00 EST

Fake Job Recruiters Hid Malware In Developer Coding Challenges [Slashdot]

"A new variation of the fake recruiter campaign from North Korean threat actors is targeting JavaScript and Python developers with cryptocurrency-related tasks," reports the Register. Researchers at software supply-chain security company ReversingLabs say that the threat actor creates fake companies in the blockchain and crypto-trading sectors and publishes job offerings on various platforms, like LinkedIn, Facebook, and Reddit. Developers applying for the job are required to show their skills by running, debugging, and improving a given project. However, the attacker's purpose is to make the applicant run the code... [The campaign involves 192 malicious packages published in the npm and PyPI registries. The packages download a remote access trojan that can exfiltrate files, drop additional payloads, or execute arbitrary commands sent from a command-and-control server.] In one case highlighted in the ReversingLabs report, a package named 'bigmathutils,' with 10,000 downloads, was benign until it reached version 1.1.0, which introduced malicious payloads. Shortly after, the threat actor removed the package, marking it as deprecated, likely to conceal the activity... The RAT checks whether the MetaMask cryptocurrency extension is installed in the victim's browser, a clear indication of its money-stealing goals... ReversingLabs has found multiple variants written in JavaScript, Python, and VBS, showing an intention to cover all possible targets. The campaign has been ongoing since at least May 2025...

Read more of this story at Slashdot.

11:00 EST

Analysis of JWST Data Finds - Old Galaxies in a Young Universe? [Slashdot]

Two astrophysicists at Spain's Instituto de Astrofísica de Canarias analyzed data from the James Webb Space Telescope, the most powerful telescope available, on 31 galaxies with an average redshift of 7.3 (when the universe was 700 million years old, according to the standard model). "We found that they are on average ~600 million years old, according to the comparison with theoretical models based on previous knowledge of nearby galaxies..." "If this result is correct, we would have to think about how it is possible that these massive and luminous galaxies were formed and started to produce stars in a short time. It is a challenge." But "The fact that some of these galaxies might be older than the universe, within some significant confidence level, is even more challenging." The most extreme case is for the galaxy JADES-1050323 with redshift 6.9, which has, according to my calculation, an age incompatible to be younger than the age of the universe (800 million years) within 4.7-sigma (that is, a probability that this happens by chance as statistical fluctuation of one in one million). If this result is confirmed, it would invalidate the standard Lambda-CDM cosmological model. Certainly, such an extraordinary change of paradigm would require further corroboration and other stronger evidence. Anyway, it would be interesting for other researchers to try to explain the Spectral Energy Distribution of JADES-1050323 in standard terms, if they can ... and without introducing unrealistic/impossible models of extinction, as is usually done. The findings are published in the journal Monthly Notices of the Royal Astronomical Society.
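Two numbers in this summary can be checked with short calculations: the cosmic ages at redshifts 7.3 and 6.9, and the chance probability of a 4.7-sigma result. The sketch below uses the closed-form age of a flat matter-plus-Lambda universe and the Gaussian tail from `math.erfc`. The cosmological parameters are assumptions, roughly Planck 2018 values, and are not taken from the paper. Radiation is ignored, which changes ages at these redshifts only slightly.

```python
import math

# Assumed flat Lambda-CDM parameters (close to Planck 2018), not from the paper.
H0_KM_S_MPC = 67.7
OMEGA_M = 0.31
OMEGA_L = 1.0 - OMEGA_M       # flat universe, radiation ignored
KM_PER_MPC = 3.0857e19
SECONDS_PER_GYR = 3.15576e16


def age_at_redshift_gyr(z: float) -> float:
    """Cosmic age at redshift z for a flat matter + Lambda universe.

    Closed form: t(z) = 2 / (3 H0 sqrt(OL)) * asinh(sqrt(OL/OM) * (1+z)^-1.5)
    """
    hubble_time_gyr = KM_PER_MPC / H0_KM_S_MPC / SECONDS_PER_GYR
    x = math.sqrt(OMEGA_L / OMEGA_M) * (1.0 + z) ** -1.5
    return 2.0 / (3.0 * math.sqrt(OMEGA_L)) * hubble_time_gyr * math.asinh(x)


def gaussian_tail(sigma: float, two_sided: bool = False) -> float:
    """Chance of a Gaussian fluctuation at least `sigma` standard deviations out."""
    one_sided = 0.5 * math.erfc(sigma / math.sqrt(2.0))
    return 2.0 * one_sided if two_sided else one_sided


for z in (7.3, 6.9):
    print(f"z = {z}: universe ~{age_at_redshift_gyr(z) * 1000:.0f} Myr old")
p = gaussian_tail(4.7)
print(f"4.7 sigma, one-sided: p ~ {p:.1e} (about 1 in {1 / p:,.0f})")
```

With these assumptions, the ages come out near 720 and 780 million years, which agrees with the 700 and 800 million years quoted above. The one-sided 4.7-sigma tail is about 1.3 in a million, or roughly double that if two-sided. "One in one million" is therefore the right order of magnitude.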

Photos: The flying doctors of Lesotho won't let their wings be clipped [NPR Topics: News]

This band of airborne health workers brings essential medical care to isolated communities in the southern African nation. In addition to turbulence, they face a new obstacle: budget cuts.

(Image credit: Tommy Trenchard for NPR)

Rockstar athletes like Ilia Malinin often get 'the yips' at the Olympics. It can make them stronger [NPR Topics: News]

Ilia Malinin's painful falls at the Milan Cortina Games follow in a long tradition of great U.S. athletes who get the "yips" or the "twisties" during the Olympics.

(Image credit: Francisco Seco)

U.S. Alpine skier Mikaela Shiffrin finishes another Olympic race without a medal [NPR Topics: News]

U.S. Alpine skier Mikaela Shiffrin looks unstoppable everywhere except the Olympics. She's running out of chances to medal at the Milan Cortina Games.

(Image credit: Marco Trovati)

Maybe figure out the meaning of life over Spring Break? [Pharyngula]

My wife got me the perfect Valentine’s Day card.

I’m afraid I got her nothing. I had a severe flare-up of my back injury, and spent much of Valentine’s Day lying in an emergency room experiencing such intense agony that I was certain that I was going to die. Now it’s the day after, I didn’t die, but I’m now covered in patches and doped up on Valium. My response to my recovery was “Oh no, now I’ve got to prepare a week’s worth of lectures that include a whole lot of in-class problems, and I’ve got to make sure the lab crosses are on track,” so I’ve spent Sunday morning frantically updating lectures and sending notes to the students under the assumption that today was Monday and I needed to be ready for my 12:45 class.

That I somehow moved from imminent fear of death to imminent fear of missing an hour of class raises a serious long-term concern about my priorities that I need to work over in my brain. I’ll put it on my list of things to get done this week. After I get through classes and labs.

10:00 EST

Before You Buy AI for Your Campus, Read This [Rhetorica]

Does your institution need to buy AI? Will students even use it? Just because students use generative AI tools like ChatGPT on campus does not mean that the technology is inherently designed for educational purposes. One of the more striking things you notice as you begin to explore the multitude of AI tools is how little the educational versions differ from their public offerings. Most come bundled with data protection and little else to show that an educational AI license is functionally any different from the free plans students already use.

We’ve heard for the last three years that faculty have to rethink teaching and assessment in the wake of AI, but what is really lost in this discourse is how universities will need to change how they think about their existence because of machine intelligence. We may not need to purchase AI, or be able to create customized degree pathways around a technology that changes so quickly, so what, then, is the role of a university?

Struggling to Sell What You Already Gave Away for Free

In “What to ask when AI vendors show up on your campus,” Katie Conrad gets right to the tension and growing disconnect faculty feel when AI vendors arrive on campus trying to sell products. Sometimes these pitches are institutional adoption campaigns in the vein of CSU buying system-wide access to OpenAI’s ChatGPT, while many other vendors attempt to sell individual subscriptions directly to students and sometimes to faculty.

Conrad’s excellent ethical questions are ones faculty and even students should put to representatives of these companies when they show up. As Conrad notes in her introduction, faculty are very rarely included in these institution-wide conversations about purchasing AI licenses for their campus:

As a few of my fellow critical AI colleagues have noted, even just getting reps (or administrators) to answer the question of what they mean by “AI” can be quite revealing. But in an environment in which you may be the only person asking (and I’d encourage you to get at least one other colleague to join you in asking questions, which can encourage others to speak up), you might want to pick a question that will get others thinking about the answers that are (or aren’t) provided.

I’d like to build off of these questions for a different, but related, audience that may not be swayed by ethical concerns alone. Here are three practical questions I’d encourage CIOs, provosts, university presidents, or other decision makers to ask vendors when they arrive, before purchasing any AI product.

1. What evidence do you have that students will stop using the free version of AI tools and start using institutional licenses if they are purchased?

Conrad notes that representatives pitch campus-wide AI adoption initiatives by leaning heavily on notions of equity and access. That’s the sales pitch, and it is beginning to wear thin because students aren’t willing to ditch their free AI plans. These arguments come bundled with data protection, mentions of secure access, and HIPAA and FERPA protection. That’s the language Edtech providers have been using for decades to sell institutions their products. The problem is that students have little interest in using AI tools their campuses provide when they already have the free equivalent. Some of the reasons are clear, and they are inseparable from how these tools are viewed culturally right now.

-

Students in high school and throughout higher education don’t trust that their use of institutionally provided AI tools won’t be monitored or linked back to them if they use AI inappropriately in a class. They prefer to use their own AI, and that largely remains ChatGPT.

-

Students don’t see the need to learn a new tool or interface that might be different than ChatGPT. Gemini, Claude, Anthropic, Grok, Perplexity—join countless wrapper apps that have been sold to schools and universities but see significantly less usage by students.

-

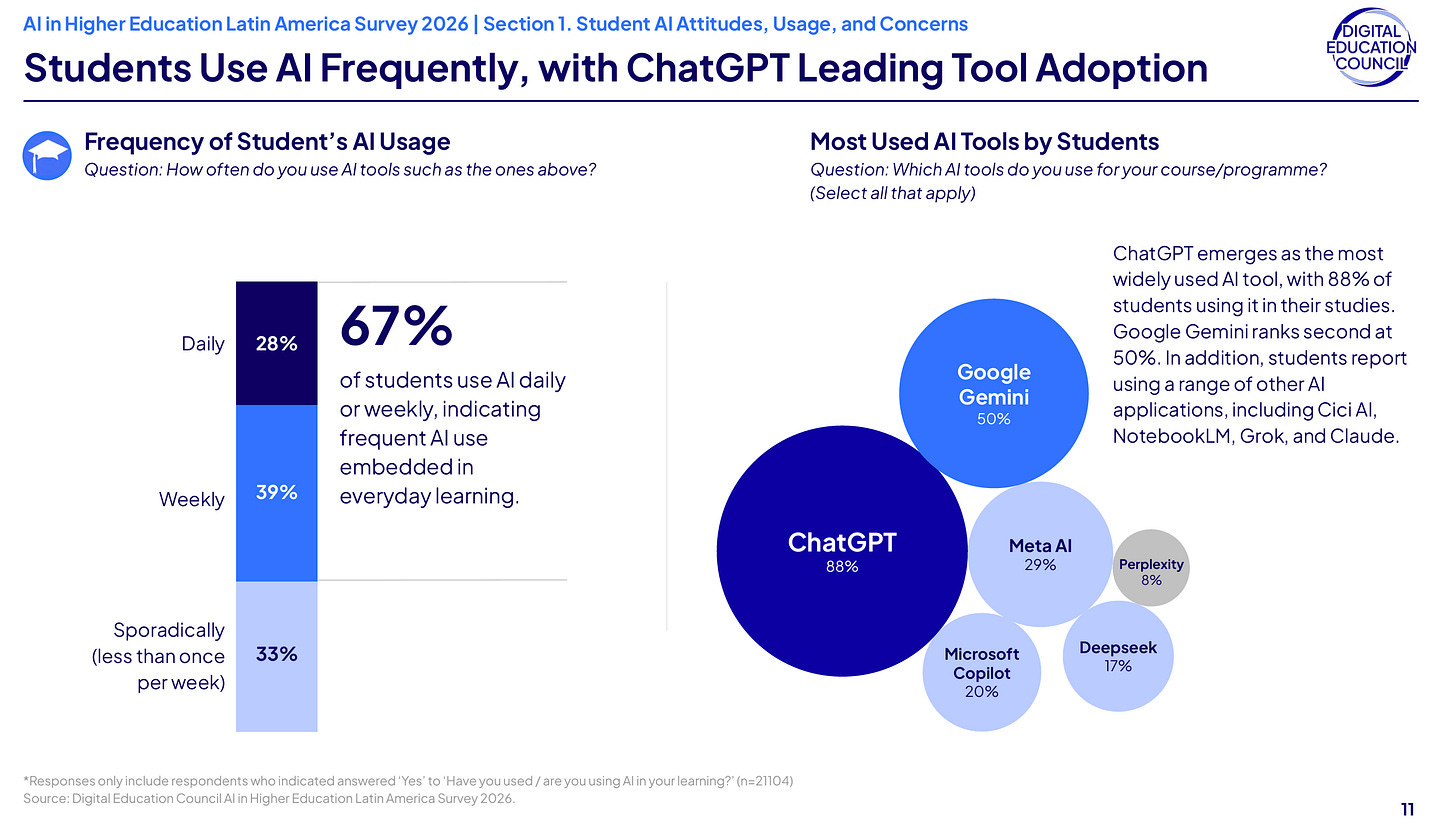

Often, the free version of ChatGPT is all students have used or have any interest in using. I fear many have falsely equated student use of one AI tool as a sign that students are even interested in learning anything about how AI works or exploring alternatives. Working with students closely using AI these past three years and I can say with some confidence that very few are deeply interested in using a different AI tool than the one they already use. That’s not just in the USA. The Digital Education Council’s recent 2026 survey about AI use throughout Latin America reveals ChatGPT remains the tool of choice for students:

Arguments about equity, access, and data security don’t hold up well if students refuse to stop using the free versions of AI. Universities cannot, and likely will not, continue to pay expensive fees for AI licenses that students use only infrequently, when faculty assign them and specifically instruct students to use them. AI developers have so thoroughly saturated the market with free AI that most users don’t see the need to purchase a $20-per-month subscription to gain access to more advanced features. Heck, ChatGPT’s free tier gives most anyone limited access to the most advanced features on a rolling basis.

It’s a self-inflicted irony that vendors can’t sell a repackaged version of their free AI simply by putting an educational wrapper around it. The free version adopted so heavily by students has become the default for an entire generation. If developers altered how these tools functioned by making them more difficult for students to use or placing more guardrails around usage, students would all but certainly stop using them and search for alternatives that let them breeze through their coursework.

2. Why should universities purchase AI education plans if students can use agentic AI to bypass them?

A fundamental blind spot many AI developers have is not recognizing how agentic AI breaks existing systems, including the very Edtech solutions they’re trying to sell. When faculty use AI with students, it is generally as an assistant or in a way that is geared toward teaching students how to use AI to augment an existing skill. These techniques are iterative and require critical thinking and ethical decision-making. Agentic AI transcends all of that. A student can simply instruct an AI-enabled browser, like Perplexity’s Comet, OpenAI’s Atlas, or Gemini within Google Chrome, to complete the task for them. And, yes, this includes having an agentic browser log into another AI tool, even a university-provided one. Imagine trying to teach your students how to use a chatbot interface to help them study, only to discover they’re using an agentic browser to do the assignment for them! Many universities will eventually ask what the point is in purchasing any Edtech right now if it cannot be secured. Anna Mills recently tested Google’s implementation of Gemini within Chrome and found that it would take an exam on her behalf.

What’s really baffling is how little awareness there is on the vendors’ part. I get it. Most of the AI reps who visit campuses are in sales, and they get only a few slide decks and talking points about the product. Still, it is shocking to me that so few of the vendors I’ve spoken with have a sense of the stakes or the actual capabilities of the products they’re trying to sell. If your company releases a free product, like an agentic browser, that undermines the expensive educational version you’ve been assigned to sell, I’d expect at least some level of explanation. Instead, it’s often “I didn’t know it could do that.” To me, that’s really alarming!

3. Why should our campus integrate AI tools into degree programs when many companies across sectors are using AI to eliminate junior-level positions?

Outside of the equity and access arguments, the one I hear most is that universities need to help students learn how AI works so they are professionally ready for jobs that increasingly ask them to use this technology. Those were great arguments in 2023 and 2024. Then, last year, we started to see certain sectors, like coding and other industries that rely heavily on entry-level work, abruptly shift to automating many of those junior positions. The shift isn’t evenly spread across industries or even at certain companies, but once a position is replaced by automation, it rarely comes back.

Our students are alarmed by this. So, too, are faculty. If the trend continues, we will witness a generation of new college graduates entering the workforce without clear pathways to careers. No, it won’t be every job, but the losses will be spread across so many white-collar careers that the end result will be nothing short of devastating. The World Economic Forum’s 2025 Future of Jobs report estimates 40% of companies will reduce staff as their skills become less relevant. Entire programs of study may not survive. And those that do will likely experience dramatic and rapid changes that break how traditional college degrees are set up. If students stop seeing college as a potential pathway into the professional world, then enrollments will drop, possibly by far more than we can imagine.

The idea that a university would adopt a technology that could contribute to such a catastrophic future for itself and its graduates is surely the existential question many have asked and will continue to ask. It also adds to the ethical concerns, learning loss, and emerging mental health issues posed by AI that many in higher education and beyond have raised. Many faculty won’t be on board with mass adoption until we get a clearer sense of AI’s role in the workforce. And that likely won’t come for many years. No one knows how AI will play out in the near future, let alone 10 or 15 years down the road. For many, the safe bet is to remain skeptical.

Vendors are going to need to start having responses to these questions, and campuses should be prepared to critically engage with any supposed solution they provide. The core challenge is changing our institutional thinking about AI and treating it as more than a tech purchase.

Led with Values

Higher education doesn’t move at the speed of Silicon Valley, nor should it. If vendors really want to sell AI, then they need to start addressing each of these questions and the scads more Katie Conrad raised. We hear the marketing that AI isn’t like other tools or innovations. Why, then, would they expect universities to treat it like a normal purchase and stay silent when there are so many practical and ethical considerations?

AI in education has been one of the most contentious and divisive topics of the past decade, so let’s do what we do best as institutions that value critical thinking and public discourse. Let’s teach our students about AI as a topic of critical inquiry! We don’t need to jump into purchasing AI to start considering our response and navigating the opportunities and challenges it poses. Institutions like Gonzaga University are making AI part of their core curriculum by putting it in conversation with their institutional values:

As students of a Catholic, Jesuit, and humanistic University, how do we educate ourselves to become women and men for a more just and humane global community?

This question is the anchor for Gonzaga’s core curriculum, a roadmap for all undergraduate students to cultivate understanding, learn what it means to be human, develop principles characterized by a well-lived life, and imagine what’s possible for their roles in the world.

As the core website underscores, “a core curriculum housed in the context of a liberal arts education in the Jesuit tradition offers the most complete environment for developing courageous individuals in any major who are ready to take on any career.”

Ann Ciasullo, director of the University Core, believes that the core is a natural fit for content about AI.

“Because a commitment to inquiry and discernment serves as the foundation of our core curriculum, our students will engage with AI in ways that are both practical and critical. They will not only explore how AI works but also analyze the implications of the content it produces. Given our mission, Gonzaga is in a unique position to frame questions about AI and digital literacy in meaningful ways.”

That aligns deeply with my own approach to teaching students how to navigate AI. But I wonder how alien that may be to universities that view AI only as a product or an opportunity to lure more graduates with credentials. We must confront some stubborn tendencies on our part that really limit the possibilities, and that’s hard. That’s cultural. And for many, the easier path is simply to buy a tool or service, pass the cost on to students, and forget about it.

What I’d love for campus leaders to consider are ways they can help shape the broader conversation, not by buying something, but instead by charging their campuses to hold a sustained conversation about what place AI has within their institution. It’s time. AI isn’t going away. Asking students, faculty, staff, and administration what role AI should play in the day-to-day operations and classrooms will be the question for most campuses for years to come.

09:00 EST

For U.S. pairs skater Danny O'Shea, these Olympics are 30 years in the making [NPR Topics: News]

Danny O'Shea turned 35 at his first Olympics, after three decades of skating and two reversed retirements.

(Image credit: Elsa)

'Major travel impacts' expected as winter storm watch issued for northern California [NPR Topics: News]

As people travel for the holiday weekend, much of Northern California is under a winter storm watch, with communities bracing for several feet of snow.

(Image credit: Justin Sullivan)

Want a mortgage for under 3% in 2026? Meet the 'assumable mortgage' [NPR Topics: News]

Low mortgage rates from the COVID era might still be attainable for homebuyers, if they find the right house and have the cash.

(Image credit: Rich Pedroncelli)

Brazil's Pinheiro Braathen wins gold, and South America's first Winter Olympics medal [NPR Topics: News]

Once a racer for Norway, Pinheiro Braathen switched to Brazil, his mother's home country. In winning the Olympic giant slalom on Saturday, he earned South America's first medal at a Winter Games.

(Image credit: Rebecca Blackwell)

08:00 EST

"More than two years after the last major 9.1 release, the Vim project has announced Vim 9.2," reports the blog Linuxiac: A big part of this update focuses on improving Vim9 Script as Vim 9.2 adds support for enums, generic functions, and tuple types. On top of that, you can now use built-in functions as methods, and class handling includes features like protected constructors with _new(). The :defcompile command has also been improved to fully compile methods, which boosts performance and consistency in Vim9 scripts. Insert mode completion now includes fuzzy matching, so you get more flexible suggestions without extra plugins. You can also complete words from registers using CTRL-X CTRL-R. New completeopt flags like nosort and nearest give you more control over how matches are shown. Vim 9.2 also makes diff mode better by improving how differences are lined up and shown, especially in complex cases. Plus on Linux and Unix-like systems, Vim "now adheres to the XDG Base Directory Specification, using $HOME/.config/vim for user configuration," according to the release notes. And Phoronix cites more new features: Vim 9.2 features "full support" for Wayland with its UI and clipboard handling. The Wayland support is considered experimental in this release but it should be in good shape overall... Vim 9.2 also brings a new vertical tab panel alternative to the horizontal tab line. The Microsoft Windows GUI for Vim now also has native dark mode support. You can find the new release on Vim's "Download" page.
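The following is a rough illustration of what adhering to the XDG Base Directory Specification means for the config location. The spec resolves `$XDG_CONFIG_HOME` when it is set to an absolute path. If it is unset, empty, or relative, the spec falls back to `$HOME/.config`, and an application then appends its own subdirectory (`vim` here). This is a Python model of the spec, not Vim's code. The `vimrc` filename inside that directory and the helper name `vim_xdg_vimrc` are assumptions. The sketch also leaves out Vim's traditional `~/.vimrc` and `~/.vim` locations, which Vim presumably still checks, in an order its own help documents.

```python
import os
import posixpath
from pathlib import PurePosixPath
from typing import Mapping, Optional


def xdg_config_home(env: Optional[Mapping[str, str]] = None) -> PurePosixPath:
    """Resolve $XDG_CONFIG_HOME as the XDG Base Directory spec describes."""
    env = os.environ if env is None else env
    value = env.get("XDG_CONFIG_HOME", "")
    # The spec treats relative paths as invalid and ignores them;
    # an unset or empty variable falls back to $HOME/.config.
    if value and posixpath.isabs(value):
        return PurePosixPath(value)
    home = env.get("HOME") or os.path.expanduser("~")
    return PurePosixPath(home) / ".config"


def vim_xdg_vimrc(env: Optional[Mapping[str, str]] = None) -> PurePosixPath:
    """Hypothetical helper: where an XDG-style vimrc would live."""
    return xdg_config_home(env) / "vim" / "vimrc"


if __name__ == "__main__":
    print(vim_xdg_vimrc())
```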

Read more of this story at Slashdot.
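The Vim 9.2 item says Vim now follows the XDG Base Directory Specification on Linux and Unix-like systems. As a minimal sketch of what that specification prescribes (not Vim's actual source), the Python below resolves the user configuration directory: use $XDG_CONFIG_HOME if it is set to an absolute path, otherwise fall back to $HOME/.config, then append vim. The function names are hypothetical, and the sketch deliberately ignores any precedence Vim may still give to a legacy ~/.vimrc or ~/.vim, which the release summary does not describe.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def xdg_config_home(env: Optional[Mapping[str, str]] = None) -> Path:
    """Resolve $XDG_CONFIG_HOME per the XDG Base Directory Specification."""
    env = os.environ if env is None else env
    value = env.get("XDG_CONFIG_HOME", "")
    # The spec says an unset, empty, or relative value must be ignored.
    if value and os.path.isabs(value):
        return Path(value)
    # Default: $HOME/.config (raises KeyError if HOME itself is missing).
    return Path(env["HOME"]) / ".config"


def vim_user_config_dir(env: Optional[Mapping[str, str]] = None) -> Path:
    """Hypothetical helper: the XDG-style Vim user config directory."""
    return xdg_config_home(env) / "vim"
```

For example, with HOME=/home/alice and no XDG_CONFIG_HOME, `vim_user_config_dir({"HOME": "/home/alice"})` returns `/home/alice/.config/vim`, the same $HOME/.config/vim path quoted from the release notes.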

07:00 EST

One-Minute Daily AI News 2/14/2026 [AI Daily News by Bush Bush]

- ChatGPT promised to help her find her soulmate. Then it betrayed her.[1]

- US military used Anthropic’s AI model Claude in Venezuela raid, report says.[2]

- Anthropic partners with CodePath to bring Claude to the US’s largest collegiate computer science program.[3]

- Google AI Introduces the WebMCP to Enable Direct and Structured Website Interactions for New AI Agents.[4]

04:00 EST

Apple Patches Decade-Old iOS Zero-Day, Possibly Exploited By Commercial Spyware [Slashdot]

This week Apple patched iOS and macOS against what it called "an extremely sophisticated attack against specific targeted individuals." Security Week reports that the bugs "could be exploited for information exposure, denial-of-service (DoS), arbitrary file write, privilege escalation, network traffic interception, sandbox escape, and code execution." Tracked as CVE-2026-20700, the zero-day flaw is described as a memory corruption issue that could be exploited for arbitrary code execution... The tech giant also noted that the flaw's exploitation is linked to attacks involving CVE-2025-14174 and CVE-2025-43529, two zero-days patched in WebKit in December 2025... The three zero-day bugs were identified by Apple's security team and Google's Threat Analysis Group and their descriptions suggest that they might have been exploited by commercial spyware vendors... Additional information is available on Apple's security updates page. Brian Milbier, deputy CISO at Huntress, tells the Register that the dyld/WebKit patch "closes a door that has been unlocked for over a decade." Thanks to Slashdot reader wiredmikey for sharing the article.

Read more of this story at Slashdot.

00:00 EST

Additional Benefits For Brain, Heart, and Lungs Found for Drugs Like Viagra and Cialis [Slashdot]

"Research published in the World Journal of Men's Health found evidence that drugs such as Viagra and Cialis may also help with heart disease, stroke risk and diabetes," reports the Telegraph, "as well as enlarged prostate and urinary problems." Researchers found evidence that the same mechanism may benefit other organs, including the heart, brain, lungs and urinary system. The paper reviewed a wide range of published studies [and] identified links between PDE5 inhibitor use and improvements in cardiovascular health. Heart conditions were repeatedly cited as an area where improved blood flow and muscle relaxation may offer benefits. Evidence also linked PDE5 inhibitors with reduced stroke risk, likely to be related to improved circulation and vascular function. Diabetes was another condition where associations with improvement were identified... The review also found evidence of benefit for men with an enlarged prostate, a condition that commonly causes urinary symptoms.

Read more of this story at Slashdot.

Saturday, 14 February

22:00 EST

Your Friends Could Be Sharing Your Phone Number with ChatGPT [Slashdot]

"ChatGPT is getting more social," reports PC Magazine, "with a new feature that allows you to sync your contacts to see if any of your friends are using the chatbot or any other OpenAI product..." It's "completely optional," [OpenAI] says. However, even if you don't opt in, anyone with your number who syncs their contacts are giving OpenAI your digits. "OpenAI may process your phone number if someone you know has your phone number saved in their device's address book and chooses to upload their contacts," the company says... But why would you follow someone on ChatGPT? It lines up with reports, dating back to April, that OpenAI is building a social network. We haven't seen much since then, save for the Sora generative video app, which exists outside of ChatGPT and is more of a novelty. Contact sharing might be the first step toward a much bigger evolution for the world's most popular chatbot. ChatGPT also supports group chats that let up to 20 people discuss and research something using the chatbot. Contact syncing could make it easier to invite people to these chats... [OpenAI] claims it will not store the full data that might appear in your contact list, such as names or email addresses — just phone numbers. However, the company does store the phone numbers in its servers in a coded (or hashed) format. You can also revoke access in your device's settings.

Read more of this story at Slashdot.
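The contacts item says OpenAI stores synced phone numbers in "a coded (or hashed) format." The summary does not say which hash function or normalization OpenAI uses, so the following is a hypothetical illustration only, assuming SHA-256 over a number normalized to an E.164-style "+<country code><digits>" string, with 1 (US/Canada) as the default country code. Because a hash is deterministic, two people who save the same number in different formats end up with the same digest, which is what would let a service match contacts without keeping the digits in plain text.

```python
import hashlib
import re


def normalize_phone(raw: str, default_country_code: str = "1") -> str:
    """Hypothetical normalizer: strip formatting, add a country code if missing."""
    digits = re.sub(r"\D", "", raw)  # keep only the digits 0-9
    if len(digits) == 10:  # assumption: a bare 10-digit number is US/Canada
        digits = default_country_code + digits
    return "+" + digits


def hash_phone(raw: str) -> str:
    """Hex SHA-256 digest of the normalized number (assumed scheme)."""
    return hashlib.sha256(normalize_phone(raw).encode("utf-8")).hexdigest()
```

With this sketch, "(617) 555-0123" and "+1 617 555 0123" both normalize to "+16175550123" and therefore hash to the same 64-character hex digest.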