"Digital complicity: The intent and embrace of problematic tech"

Joseph Reagle

2018-10


Joseph Reagle, Northeastern University

Motivation

The zeitgeist

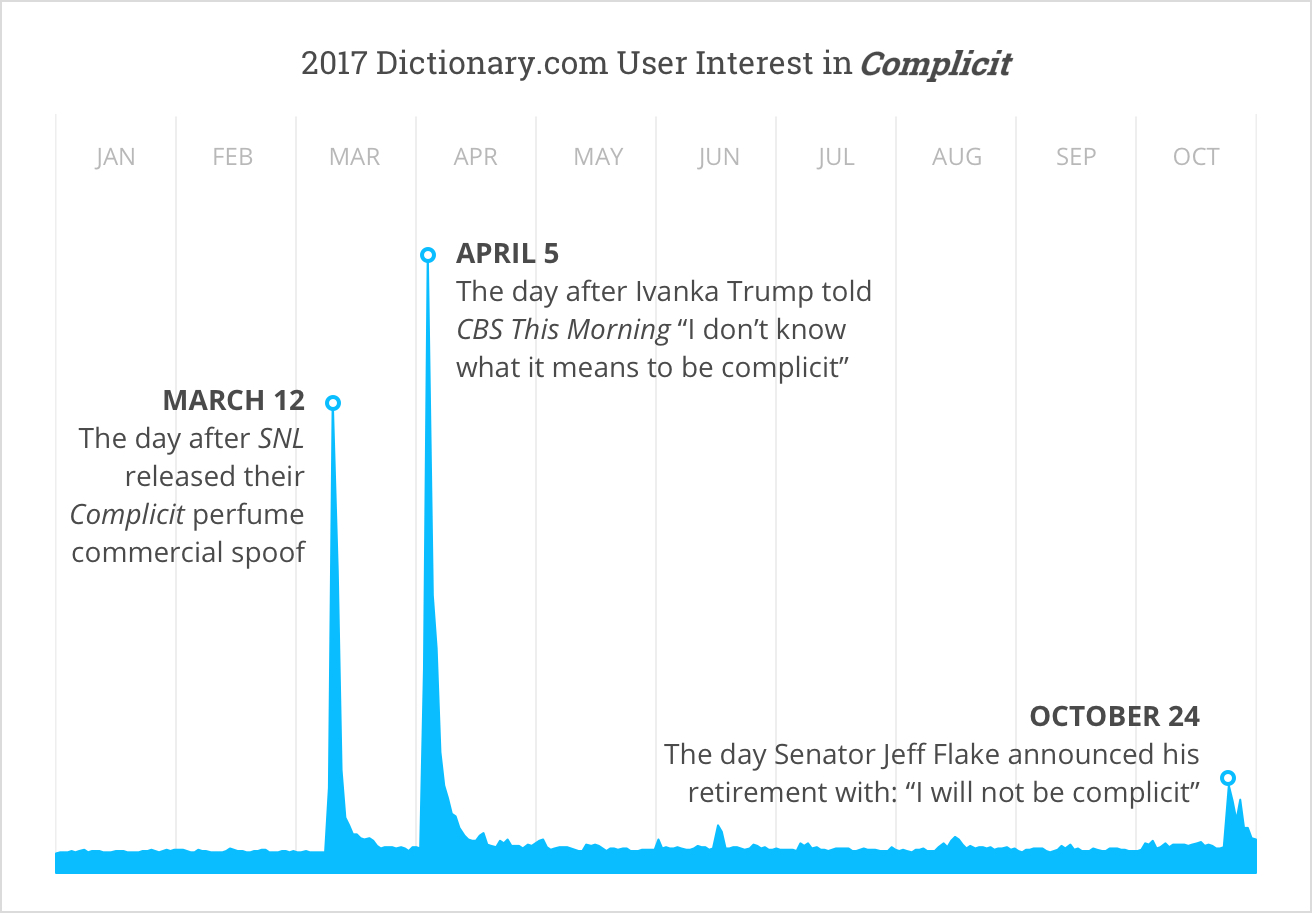

In 2017, globalism, sexist culture, and Trumpian nepotism prompted Dictionary.com to name “complicit” its word of the year (Dictionary.com (2017)).

and Hacking Life (2019)

I usually lose a lot of things like my keys… [the implant] will give me access and help me. (Sandra Haglof in Salles (2017))

[I want to be] part of the future.

Questions of complicity

- Is Facebook complicit in spreading the fake news that roiled the 2016 election?

- Are biohackers who inject themselves with implants for the sake of convenience complicit in the more oppressive tracking which will follow?

- Are those who develop facial recognition technology and databases complicit when these systems are used by oppressive regimes?

These questions are about problematic technology: tech-related artifacts, ideology, and techniques that have the potential to be broadly harmful.

Digital technology—and its assessment—are especially problematic.

Agenda

- A framework for complicity.

- The blameworthiness of Facebook’s intent.

- The problems of the life hacker’s embrace.

- Responses which limit complicity.

Complicity

Ancients

Who beyond the thief is obliged to compensate the victim of the theft?

Thomas Aquinas said we must consider those who contribute “by command, by counsel, by consent, by flattery, by receiving, by participation, by silence, by not preventing, [and] by not denouncing” (1917, II.II.7.6).

More recent theory

- Lewis (1948) maintained an individualistic, skeptical, and minimalist approach to collective culpability; Jaspers (2000) did the opposite.

- Gardner (2006) focused on causality and degree of difference-making in his analysis, as did Kutz (2000, “Complicity”).

- Mellema (2016) distinguished between enabling and facilitating harm, and between shared and collective responsibility.

… and applications

- Labor
  - Brock (2016), “Consumer complicity and labor exploitation”
  - Lawford-Smith (2017), “Does purchasing make consumers complicit in global labour injustice?”
- Tech (Google)

Lepora & Goodin (2013)

Lepora & Goodin

L&G framework

- agents
  - shady non-contributors, contributors, co-principals
- acts
  - connivance, contiguity, collusion, collaboration, condoning, consorting, conspiracy, and full joint wrongdoing
- dimensions
  - …

Complicit Blameworthiness

CB = (RF × BF × CF) + (RF × SP)

- BF (Badness Factor) of the principal wrongdoing
- RF (Responsibility Factor)
  - V: voluntariness
  - Kc: knowledge of contribution
  - Kw: knowledge of wrongness
- CF (Contribution Factor)
  - C: centrality, including essentiality
  - Prox: proximity
  - Rvse: reversibility
  - Temp: temporality
  - Pr: planning role
  - Resp: responsiveness
- SP (Shared Purpose) with wrong-doers

Implications

- Kc=0 or Kw=0 or CF=0
- Kc=1 and Kw=1 and CF > 0, but V=0
- Kc=1 and Kw=1, but (0<CF<1 or 0<V<1 or 0<SP<max)
- Kc=1 and Kw=1 and V=1 and CF=1 and SP=max
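A minimal Python sketch can make the formula and the four conditions above concrete. It rests on assumptions the slides do not make: every factor is scaled to [0, 1], “SP=max” is represented as 1.0, and RF, which L&G build from V, Kc, and Kw, is modeled here as their plain product (a hypothetical choice, so that any zero term zeroes responsibility). The function names and sample agents are illustrative, not L&G's.

```python
# Sketch of Lepora & Goodin's complicit blameworthiness:
#   CB = (RF x BF x CF) + (RF x SP)
# ASSUMPTIONS (not from the slides): every factor lies in [0, 1],
# "SP=max" is represented as SP = 1.0, and RF is modeled as the
# product V * Kc * Kw (a hypothetical choice of how RF combines them).


def responsibility_factor(v: float, kc: float, kw: float) -> float:
    """Hypothetical RF: zero if any of V, Kc, Kw is zero."""
    return v * kc * kw


def complicit_blameworthiness(v: float, kc: float, kw: float,
                              bf: float, cf: float, sp: float) -> float:
    """Return CB = (RF x BF x CF) + (RF x SP)."""
    rf = responsibility_factor(v, kc, kw)
    return rf * bf * cf + rf * sp


BF = 0.7  # illustrative badness of the principal wrongdoing

# One made-up agent for each condition on the Implications slide.
cases = {
    "Kc=0": complicit_blameworthiness(v=1, kc=0, kw=1, bf=BF, cf=1, sp=0),
    "V=0": complicit_blameworthiness(v=0, kc=1, kw=1, bf=BF, cf=0.5, sp=0),
    "partial": complicit_blameworthiness(v=0.5, kc=1, kw=1, bf=BF, cf=0.5, sp=0.5),
    "full": complicit_blameworthiness(v=1, kc=1, kw=1, bf=BF, cf=1, sp=1.0),
}

for label, cb in cases.items():
    print(f"{label:>8}: CB = {cb:.3f}")
```

Under these assumptions the Kc=0 and V=0 agents come out at zero, the partial agent lands strictly between zero and the maximum, and the full co-principal reaches the ceiling of BF + 1.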

Facebook’s intent

Fake news

Whereas “complicit” was Dictionary.com’s word of 2017, “fake news” took the honor at Collins Dictionary (Flood (2017)).

Growth guilt

I feel tremendous guilt…. The short-term dopamine driven feedback loops that we have created are destroying how society works: no civil discourse, no cooperation, misinformation, mis-truth… (Palihapitiya (2017), min. 22:30)

Why?

because [of] the unintended consequences of a network when it grows to a billion or two billion people … it literally changes your relationship with society, with each other…. (Parker in Allen (2018))

Complicit Blameworthiness

(responsibility × badness × contribution)

+

(responsibility × shared_purpose)

Let’s assume shared_purpose = 0

(responsibility × badness × contribution)

Facebook’s responsibility

L&G Responsibility Factor (RF)

- RF = f(V, Kc, Kw)

- V: voluntariness
- Kc: knowledge of contribution
- Kw: knowledge of wrongness

V: Voluntary?

Absolutely

Kc: Knowledge of contribution?

The inventors, creators—it’s me, it’s Mark [Zuckerberg], it’s Kevin Systrom on Instagram, it’s all of these people—understood this consciously. And we did it anyway. (Parker in Allen (2018))

Kw: Knowledge of wrongness?

I think in the back deep deep recesses of our minds we kind of knew something bad could happen, but I think the way we defined it was not like this. (Palihapitiya (2017))

“Not like this”

“The Unanticipated Consequences of Purposive Social Action” (Merton (1936)) distinguishes between:

- the unknowable
  - “interplay of forces and circumstances which are so complex and numerous that prediction of them is quite beyond our reach”
- our ignorance
  - for which “knowledge could conceivably be obtained” but is not

Facebook’s knowledge

- Facebook didn’t anticipate becoming a platform for propaganda.

- Nor was it their intention.

It was not as if propaganda was inconceivable (unknowable), only that they were largely ignorant of the possibility.

Negligence

duty, breach, causality, & harm

It’s clear now that we didn’t do enough to prevent these tools from being used for harm as well. That goes for fake news, foreign interference in elections, and hate speech, as well as developers and data privacy. We didn’t take a broad enough view of our responsibility, and that was a big mistake. (Zuckerberg in Timberg & Romm (2018))

Consequences—expanded

- the agent’s mental state, which renders the consequence:
  - unanticipated, which can result from
    - unknowable circumstances, or
    - ignorant disposition
      - naive: simply did not consider
      - negligent: unconsidered despite obligation
      - contrived: avoided consideration
  - unintended: the consequence is misaligned with intention
- the effect of consequence
  - primary: consequence that directly follows
  - byproduct: consequences which are side effects
- the valence of consequence
  - positive: desirable consequences
  - negative: undesirable consequences
  - perverse or revenge: undesirable consequences counter to intention
- extent of consequence: ranging from nil, to a little, to a lot

Responsibility value

RF (Responsibility Factor) = f(V, Kc, Kw)

CB = (RF=0.5 × BF × CF)

Insight: Unintended and unanticipated consequences complicate the assessment of responsibility, especially with respect to knowledge and types of ignorance.

Facebook’s badness

Badness Factor (BF)

It’s literally at a point now where I think we have created tools that are ripping apart the social fabric of how society works. (Chamath Palihapitiya (2017), min. 21:38)

Badness value

Palihapitiya knew “something bad could happen” but it “was not like this.”

CB = (RF=0.5 × BF=0.7 × CF)

Insight: Again, contingency and scale complicate the assessment of badness, which raises other issues we will return to.

Facebook’s contribution

Contribution Factor (CF)

Facebook contributed to the spread of false information. They could’ve done better.

Contribution value

CB = (RF=0.5 × BF=0.7 × CF=0.7)

Facebook was complicit

CB ≈ 0.25
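Spelled out with the values chosen on the preceding slides, and with SP = 0 as assumed earlier, the arithmetic behind this figure is:

$$
\begin{aligned}
CB &= (RF \times BF \times CF) + (RF \times SP) \\
   &= (0.5 \times 0.7 \times 0.7) + (0.5 \times 0) \\
   &= 0.245 \approx 0.25
\end{aligned}
$$

The exact product, 0.245, is what the slide rounds to 0.25.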

Exoneration?

If Facebook hadn’t succeeded with its platform, someone else would have.

Other discussion?

Life hackers’ embrace

The embrace of technology

Life hackers’ voluntary embrace of technology (1) eases coercive imposition and (2) increases normative pressure.

Consciousness hacking

We believe that modern technology, driven by science, has an incredible (and largely unrealized) potential to support psychological, emotional, and spiritual wellbeing. (Consciousness Hacking (2016))

Coercive imposition

There is no law or regulation to limit the use of this kind of equipment in China. The employer may have a strong incentive to use the technology for higher profit, and the employees are usually in too weak a position to say no. (Qiao in Chen (2018)).

Life hacker complicities

Insight: Whereas technologies of the self are performed by the self for the self’s benefit, those of power see the self dominated and objectivized by another (Foucault (1982/1997)).

- Biohackers can strengthen the regime of surveillance.

- Productivity hackers can increase workaholism and precarity.

- Health hackers can contribute to healthism.

- Relationship hackers can further alienation.

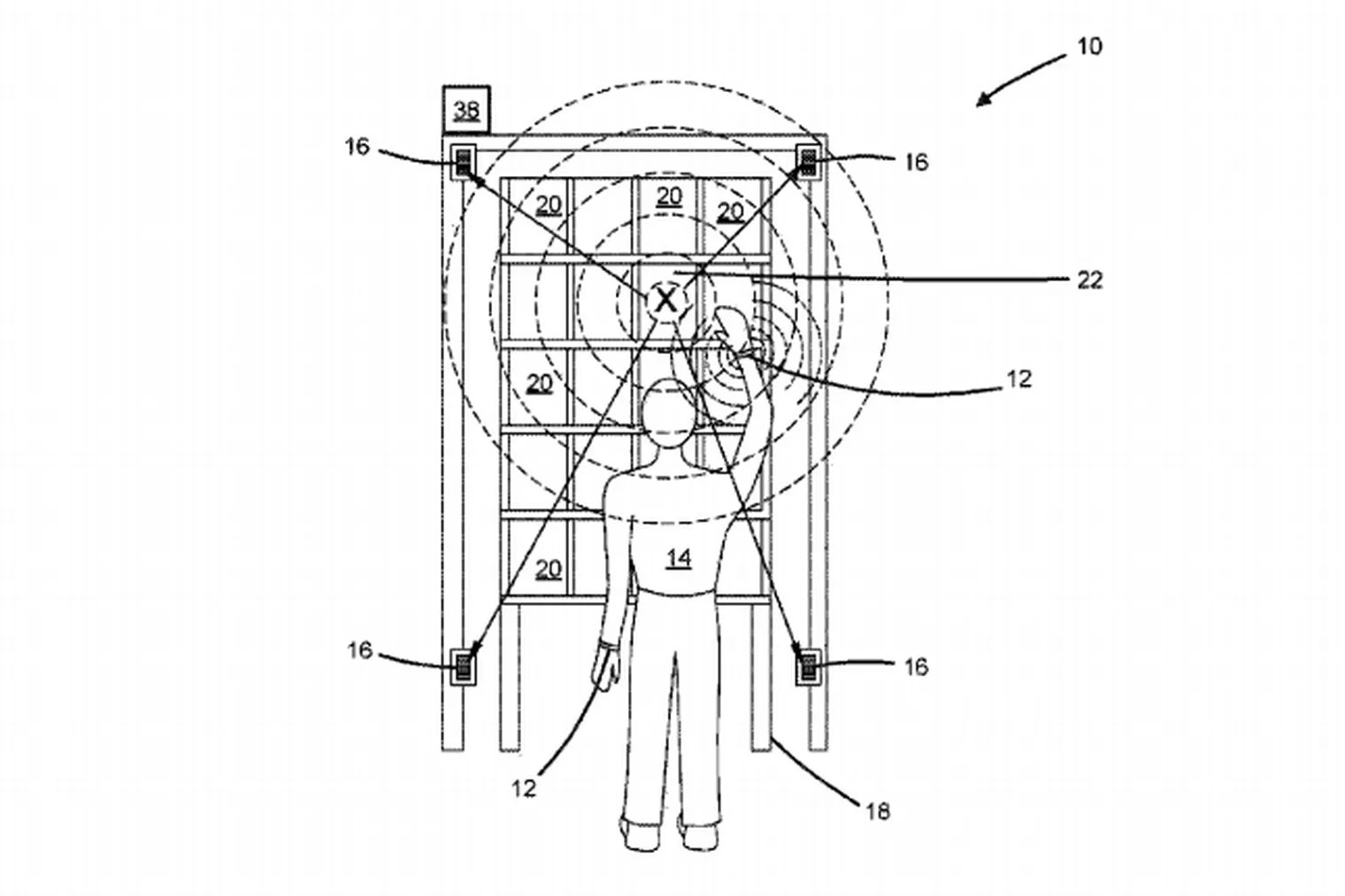

Pavlok device

Worker monitoring & augmentation

My concerns

- Market complicity in being an early adopter.

- Cultural complicity in harmful norms.

Market complicity

Embracing, adopting, and testing problematic tech facilitates its deployment.

It adds grease to a slippery slope.

Cultural complicity

Little (1998) refers to the culpability of participating in cosmetic surgery as cultural complicity, which is “when one endorses, promotes, or unduly benefits from norms and practices that are morally suspect.”

Surgeon complicity in cosmetic insecurity

- Responsibility: high
  - Voluntary: yes
  - Knowledge: high
- Badness: significant and certain for some
- Contribution: high
  - Centrality and essentiality: high

Significant complicity. And those who encourage harmful norms and practices are “crassly complicit” (Little (1998), p. 170)

Patient complicity in cosmetic insecurity

- Responsibility: minimal
  - Voluntary: yes
  - Knowledge: minimal
- Badness: significant and certain for some
- Contribution: minimal
  - Centrality and essentiality: minimal

Negligible complicity.

L&G on productivity hacker

- Responsibility: minimal
  - Voluntary: yes
  - Knowledge: negligible
- Badness: equivocal
- Contribution: minimal
  - Centrality and essentiality: negligible

Negligible complicity. (Though gurus are more so.)

Equivocal badness?

Unlike many of the harms discussed in the literature, problematic tech is equivocal. Consequences differ with respect to:

- valence: good and bad

- scale: at different scales

- constituencies: affecting them differently

e.g., Pavlok wrist zapper

How to compare…

- Letting a child play with a loaded gun has a significant chance of resulting in a definite harm that affects the child and dozens of others.
  - It is a probable tragedy at the micro scale.
- Facial recognition technology has good applications, but it can be abused with society-wide effects.
  - It is a possible disaster at the macro scale.

Responses which limit complicity

Declaiming

- “Statement on the Intent and Use of PICS: Using PICS Well” (Reagle & Weitzner (1998)).

- Google’s Pichai wrote that they will not apply AI where it is likely to cause harm, be used within weapons, violate international surveillance norms, or otherwise violate international law and human rights (Pichai (2018)).

- Microsoft’s Nadella internal memo clarified that ICE contracts were related to legacy office applications and not “any projects related to separating children from their families at the border” (Nadella quoted in Warren (2018)).

Distancing (specific)

- Googlers refuse to work on systems related to military projects, with some resigning in protest (Bergen (2018); Conger (2018); Wakabayashi & Shane (2018)).

- 500+ MS employees called for their company to cancel any U.S. ICE contracts (Frenkel (2018)).

Distancing (general)

- 2800+ technologists pledge to “never again” build databases that allow governments to “target individuals based on race, religion or national origin.” They pledge to:
  - minimize the collection of related data (even if not originally intended toward oppressive ends),
  - destroy existing “high-risk data sets and backups,”
  - resign if need be (Lien & Etehad (2016); Never Again (2017)).

- “An Oath For Programmers”: pledge to only undertake “honest and moral work” and to “consider the possible consequences of my code and actions” (Johnstone (2016)).

Opposition

- Diaspora & Mastodon are Facebook alternatives: decentralized, opposed to ads, and with higher user control.

- Some technologists oppose digital harms by creating systems that oppose or interfere with problematic technologies (Brunton & Nissenbaum (2015)).

- Some outside of Microsoft threatened to boycott events in solidarity with the ICE protest (Thorp (2018)).

- “Hacktivism” can be claimed as resistance: by experimenting with problematic technologies hackers better illuminate their promise and perils.

The efficacy of boycotts

Beyond the (limited) economic effect of such distancing, protests have an important role: by making a concern a topic of public discussion, protesters remove the potential for others to claim they were ignorant of their responsibility (i.e., “consciousness raising”).

Conclusion

1. Lepora & Goodin’s framework

   (responsibility × badness × contribution)

2. Facebook’s intent

   - Merton’s “unanticipated consequences” is applicable, when clarified and expanded.
   - Yet, assessing knowledge of unintended, unanticipated, and equivocal consequences is difficult, especially at scale.

3. Life hackers’ embrace

   - Little’s “cultural complicity” is applicable.
   - Yet, reckoning with the following is difficult:
     - responsibility:
       - tech of self ➡ tech of power
       - market complicity in being an early adopter
       - cultural complicity in harmful norms
     - badness:
       - equivocal (valence of outcomes, affected constituencies)

4. Responses which limit complicity

   - Declaiming
   - Distancing (specific & general)
   - Opposition

Thank you

Appendices

Agents & acts

| Shady Non-Contributors (non-causal) | Non-Blameworthy Complicit Contributors (causal but not responsible) | Blameworthy Complicit Contributors (causal & responsible) | Participating Co-principals (constitutive) |
|---|---|---|---|
| | (not voluntary or knowledgeable of harm/role) | | |

Agents & the 4 “C”s

- shady non-contributors (not causal)
  - connivance (tacitly assenting)
  - condoning (granting forgiveness)
  - consorting (close social distance)
  - contiguity (close physical distance)
- non-blameworthy contributors (causal)
- blameworthy contributors (causal)
- co-principals (constitutive)

Foucault’s technologies

technologies of the self … permit individuals to effect … operations on their own bodies and souls, thoughts, conduct, and way of being, so as to transform themselves in order to attain a certain state of happiness, purity, wisdom, perfection, or immortality.

technologies of power … determine the conduct of individuals and submit them to certain ends or domination, an objectivizing of the subject; (Foucault (1982/1997), p. 225)

Hard & soft power

- hard power:
  - determines the conduct of the individual;
- soft power:
  - shapes their conduct through norms, related to Foucault’s (1977, “Discipline and punish”) notion of discipline.

Imprisoning a class of people in a cage is a technology of hard power; shaming those who leave home without a chaperon is a technology of soft power.

Technologies—expanded

- technologies of self: performed by and for the individual
- technologies of power: enacted upon the individual by others
  - hard: coercively determining conduct (e.g., prison)
  - soft: normatively shaping conduct (e.g., discipline)
- complicity for embracing problematic technology by…
  - easing coercive imposition of hard power
  - reinforcing suspect norms of soft power (i.e., cultural complicity)
    - banal (acceding to soft power)
    - crass (benefiting from soft power)

Kutz’s collective culpability

- complicity principle
  - “I am accountable for what others do when I intentionally participate in the wrong they do or harm they cause. I am accountable for the harm or wrong we do together, independently of the actual difference I make” (Kutz (2000), “Complicity”, p. 122).

L&G’s essentiality

- essential contribution
  - necessary to the harm in “every suitably nearby possible world.”
- potentially essential contribution
  - a “necessary condition of the wrong occurring, along some (but not all) possible paths by which the wrong might occur” (Lepora & Goodin (2013), p. 62).

Essentiality in the firing squad

- First, we can take the firing squad as a “consolidated wrongdoing” in which the actions of agents together constitute the harm. Each soldier, whether they shot a bullet or not, has jointly participated in the collective harm of a firing squad.

- Second, we can perform an individual assessment of essentiality in which we ask if there is a possible universe in which an individual’s actions made a difference. Because each soldier understood that his or her gun might have had a bullet, each one is potentially essential and exposed to complicity.
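A toy possible-worlds model can illustrate the two notions. This is a hypothetical sketch, not Lepora & Goodin's own formalism. It treats every pattern of live and blank rounds as a “suitably nearby” world. A soldier's shot counts as necessary only when it is the sole live round. The squad size of three is arbitrary. Under these assumptions no soldier is essential, because in worlds with several live rounds the death is overdetermined. Every soldier, however, is potentially essential, which matches the individual assessment above.

```python
from itertools import product

# Toy model (an assumption, not Lepora & Goodin's own formalism):
# - a "possible world" assigns a live (True) or blank (False) round to each rifle;
# - the prisoner dies iff at least one live round is fired;
# - worlds with no live round are dropped, because the wrong never occurs there.
N_SOLDIERS = 3  # hypothetical squad size

worlds = [w for w in product([True, False], repeat=N_SOLDIERS) if any(w)]


def necessary_in(world: tuple[bool, ...], soldier: int) -> bool:
    """True iff this soldier's shot is a necessary condition of the death
    in `world`, i.e., removing it would leave no live round."""
    others_live = any(live for i, live in enumerate(world) if i != soldier)
    return world[soldier] and not others_live


def classify(soldier: int) -> tuple[bool, bool]:
    """Return (essential, potentially_essential) for one soldier."""
    flags = [necessary_in(w, soldier) for w in worlds]
    # essential: necessary in every world considered;
    # potentially essential: necessary along at least one path.
    return all(flags), any(flags)


for s in range(N_SOLDIERS):
    essential, potential = classify(s)
    print(f"soldier {s}: essential={essential}, potentially essential={potential}")
```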